The Smartest, Easiest-to-Use Marketing Automation Platform

The Only Company Focused Solely on Marketing Automation Platforms

Marketing automation is the engine that drives modern digital marketing. But across the board, leading digital marketing automation platforms have stalled out. Big tech behemoths have bought up the best platforms and used them as loss leaders to sell their all-in-one, best-in-none solutions.

We believe there’s a better way. We’re the only marketing automation platform that focuses entirely on making better, easier, more intuitive solutions to help automate modern marketing departments and sales teams.

Intelligence

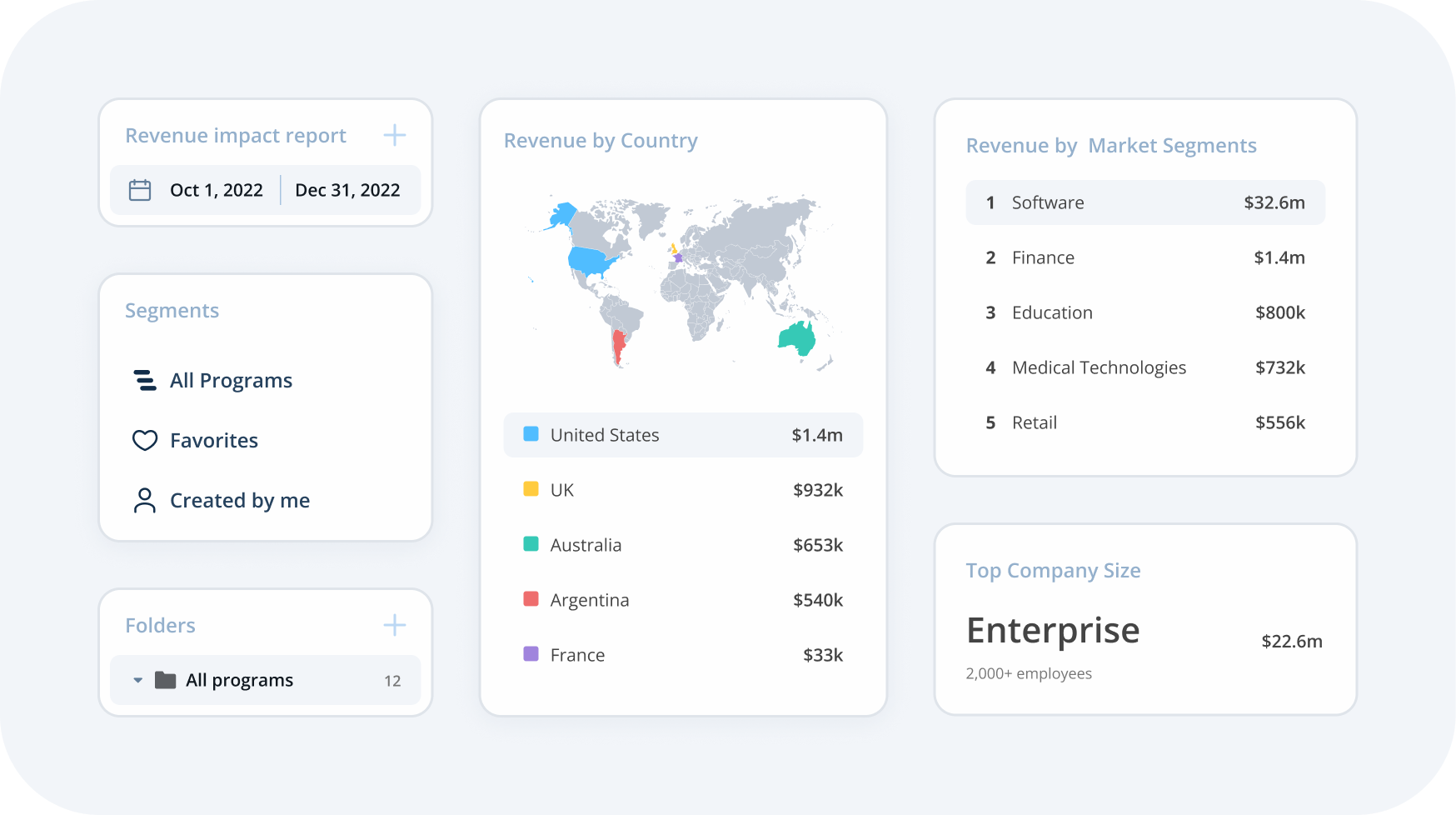

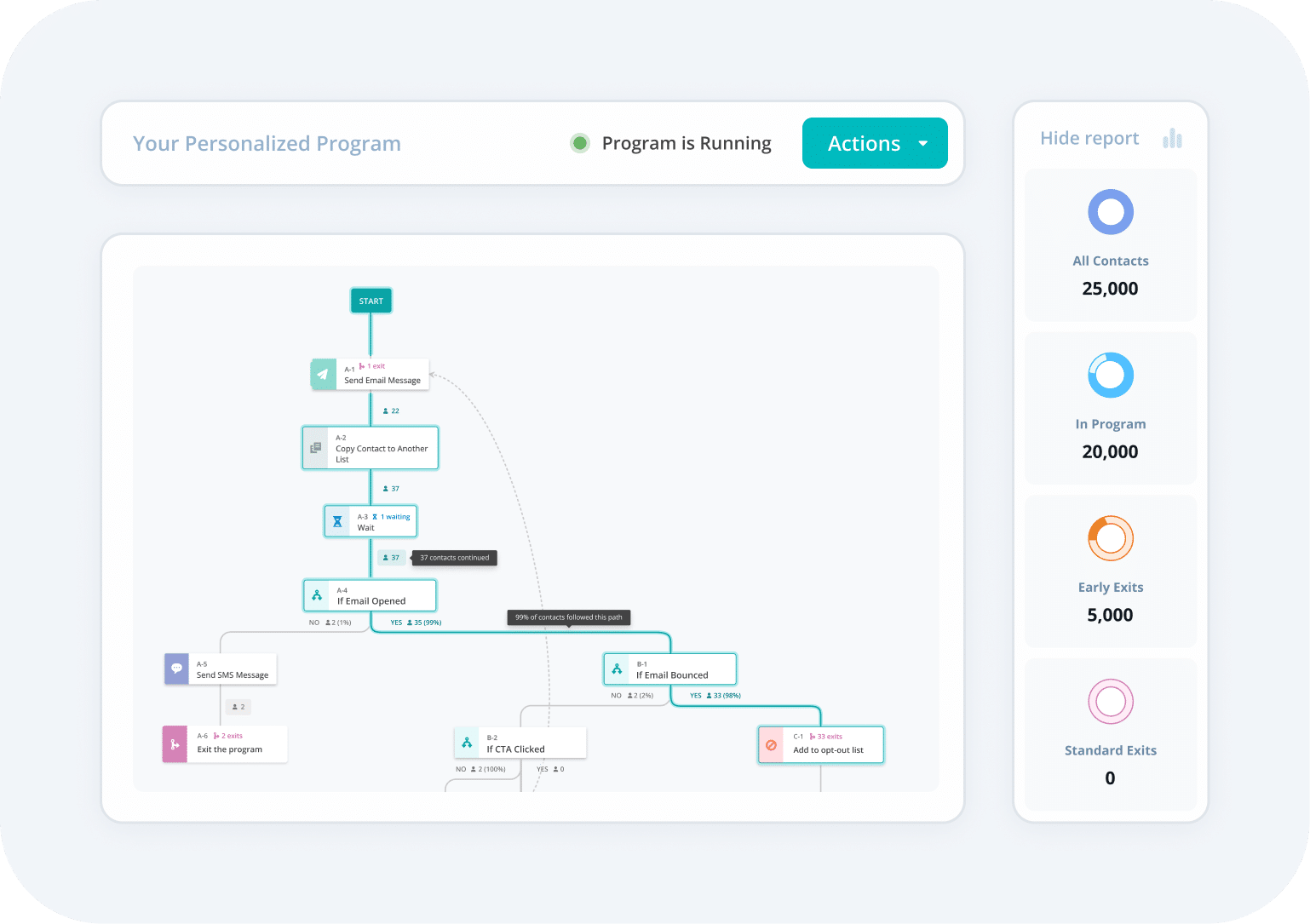

Apply the power of AI, machine learning, and data to build smarter, more personalized multichannel marketing campaigns.

Ease-of-Use

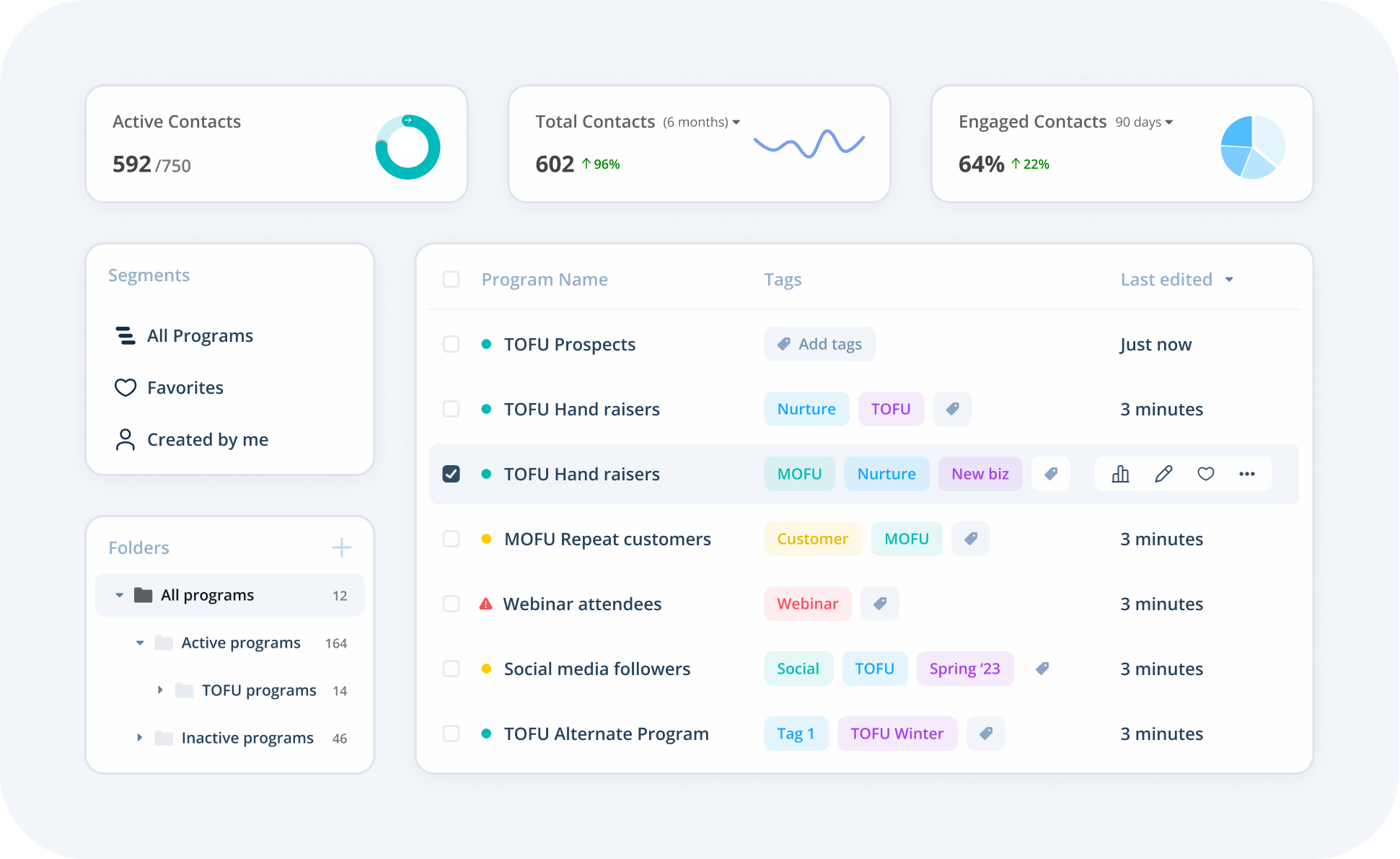

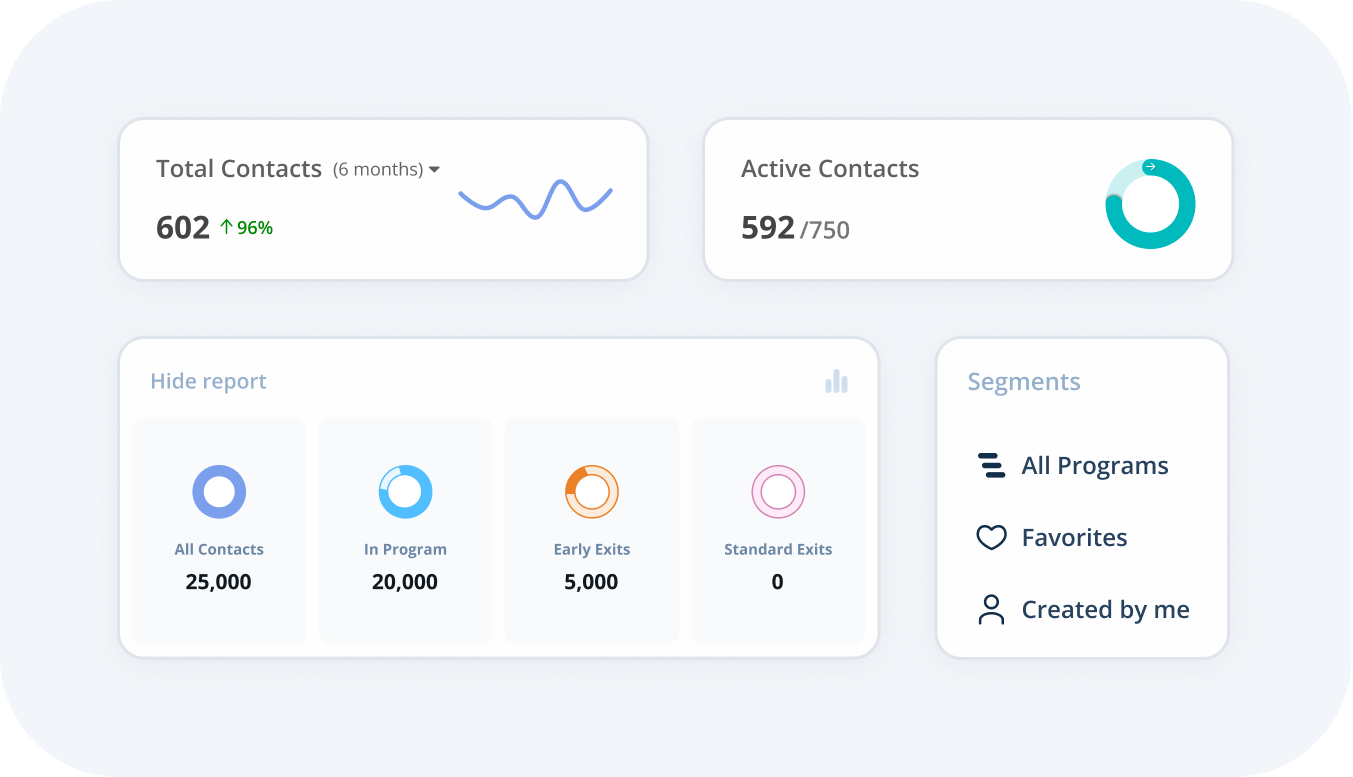

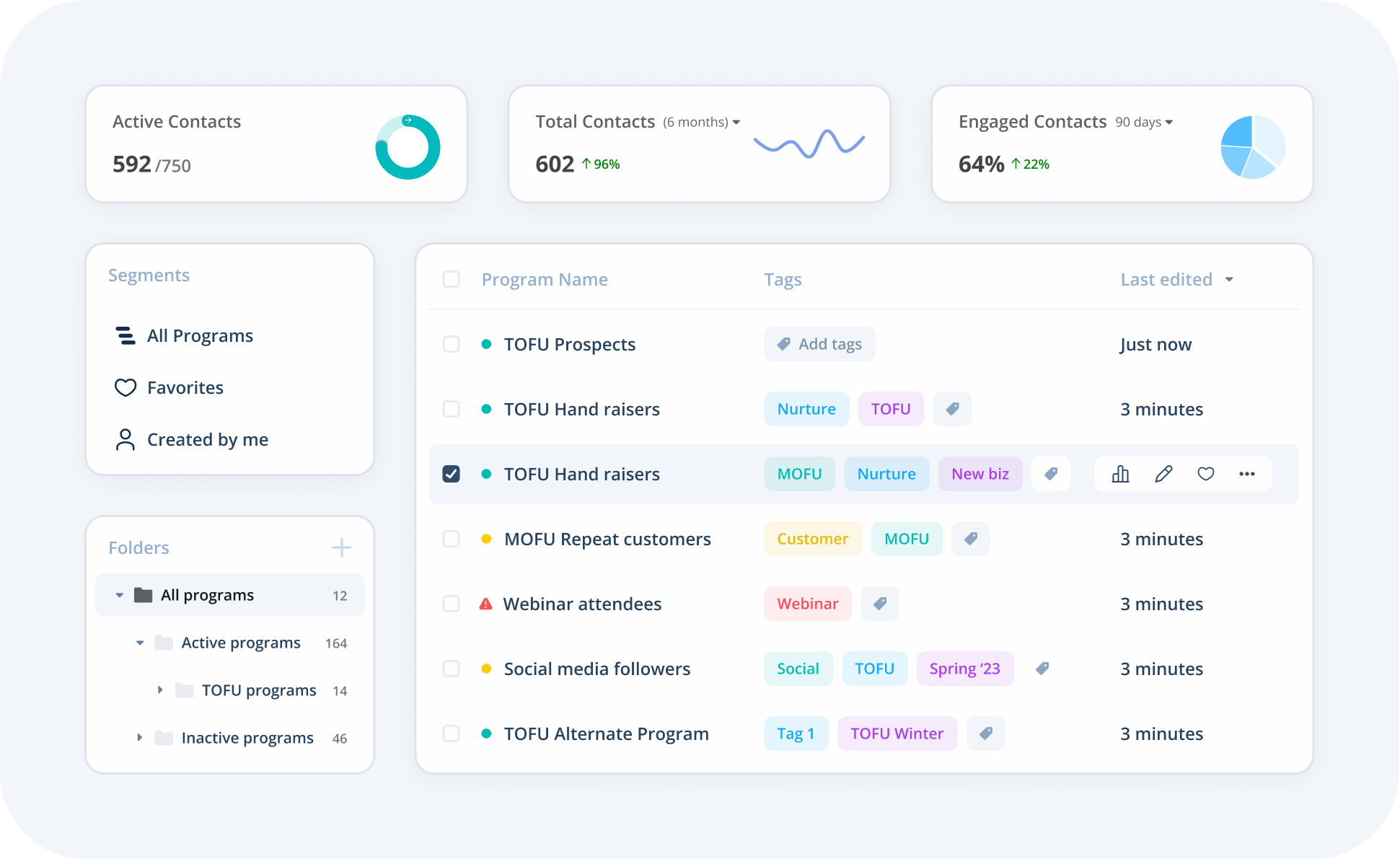

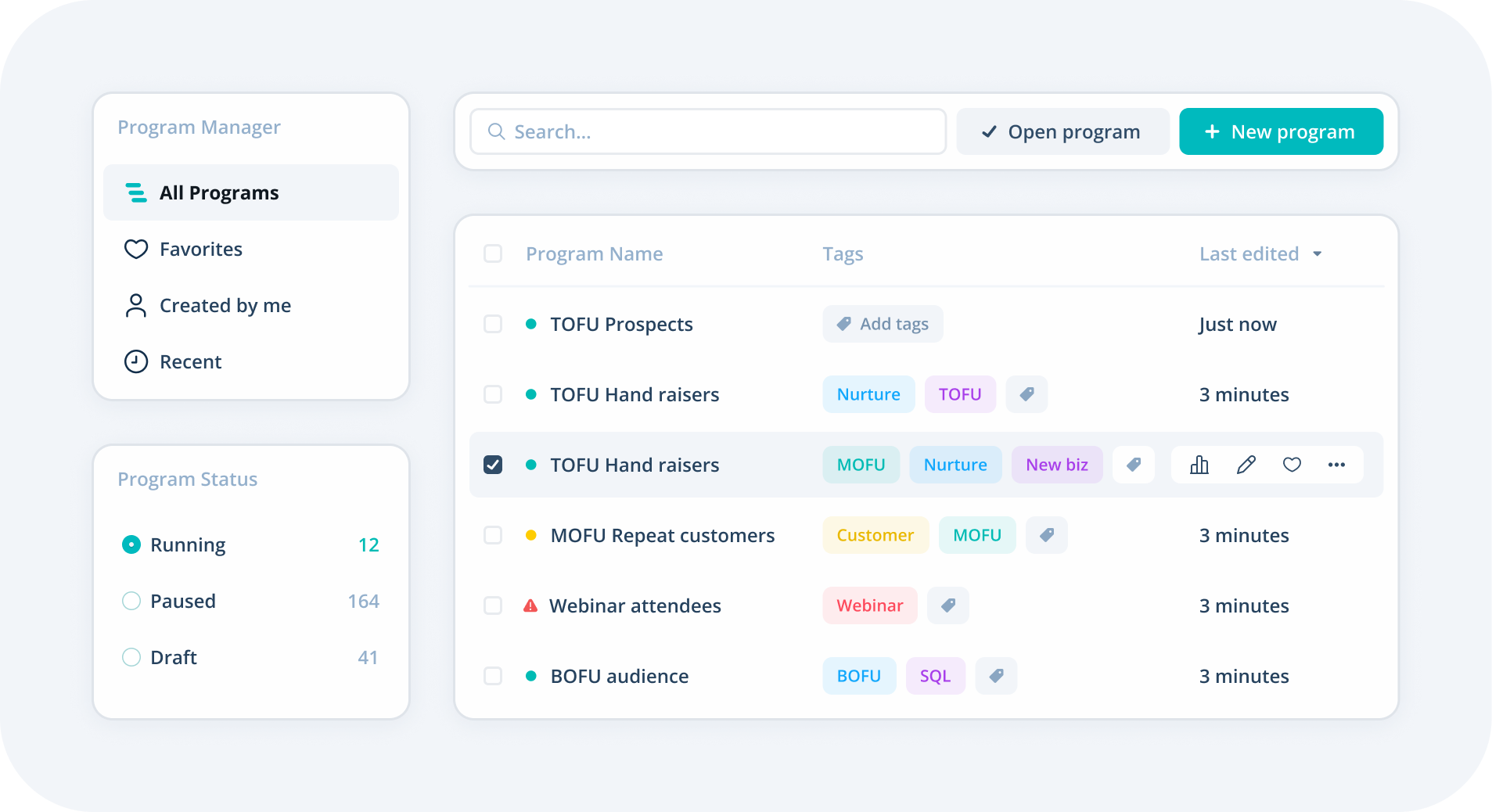

Build and deploy complex, segmented customer journeys easily and quickly with our modern and responsive user interface.

Openness

Use the CRMs and applications you trust, through native, open integrations, without pesky vendor lock-in requirements.

Marketing Automation Solutions

Act-On’s automated marketing platform includes the campaign automation tools you need: lead generation and lead scoring, analytics and metrics, plus support for omnichannel functions like SMS marketing and social media.

We focus on innovative marketing automation services and providing personal customer support. Most other marketing automation companies can’t honestly say that. Probably because they’re actually Big Tech wolves hiding in marketing automation sheep’s clothing: Hi Marketo, Eloqua, Pardot, and HubSpot!

Explore Act-On's Features and Capabilities

Try the Marketing Automation Platform Customers Love Using

Look, we try not to brag, but we have to be honest: Marketers love Act-On.

Maybe it’s our best-in-class customer support?

Or our competitive pricing?

Or the library of educational assets you need to succeed?

Open APIs Mean Our Marketing Automation Platform Works With Your Favorite Tools

Act-On’s native integrations with popular digital marketing software include:

Successful Companies That Trust Act-On:

63K+

Customer experience automations monthly

4K+

Customers globally

400M+

Behaviors tracked monthly

98%

Satisfaction with Act-On customer support

Meet Act-On's Favorite People: Our Customers

We never lose sight of the why behind our marketing automation services: making life easier for marketers and their customers. Act-On’s automated marketing solutions combine the best of both worlds: bleeding-edge technological innovation and the kind of personalized customer support that never goes out of style.

What Act-On Customers Have to Say

Act-On gave me the insight I needed to better strategize how we engage our different audience segments.

Kristin Williams

Marketing Program Manager, Physicians Insurance

Executing our email campaigns through Act-On increased our open rates by 68% and our CTR by 25%.

Agata Krzysztofik

Chief Marketing Officer, Simscale

Act-On’s lead scoring has transformed our marketing efforts and allows us to automatically transfer great leads through our CRM.

Sam Saunders

Digital Marketing Manager, DMC Canotec

Through analytics and knowing where our customers are in their journey, we’re able to deliver the most relevant messaging.

Marc Wilensky

VP of Communications and Brand Marketing, Tower Federal Credit Union

Thanks to Act-On, we were able to enhance our marketing strategy and gain valuable insights into customer preferences.

Adam Nutting

Marketing Automation Specialist, Traverse City Tourism

Learn More About Act-On

Explore topics like marketing automation, multichannel marketing, and digital marketing strategy with our comprehensive blogs, ebooks, press releases, podcasts, and more.

ALLDATA

It’s every email marketer’s worst nightmare: a sudden spike in bounce rates. Luckily, ALLDATA had Act-On’s deliverability team on their side.

What is Lead Scoring for Marketing and What Are the Benefits?

Lead scoring helps organizations move prospects along their buying journey in a structured, strategic way — which is especially helpful considering how complex buying journeys have become.

Crafting A Modern Lead Story: Strategies for Effective Lead to Pipeline Conversion

We all know the classic tale: Lead meets brand. They’ve been burned by broken promises before, but this time feels different. The brand’s products solve